The digital marketing landscape has evolved dramatically over the past decade, bringing with it both innovative opportunities and questionable shortcuts. Among these developments, CTR Bot SearchSEO has emerged as a controversial topic that continues to spark heated debates within the SEO community. As search engines become increasingly sophisticated in their ranking algorithms, some marketers have turned to automated solutions in hopes of gaming the system.

This comprehensive guide explores the world of search engine click bots, examining how they work, why they’re used, and what consequences await those who employ them. Whether you’re a curious digital marketer, a business owner evaluating your options, or simply someone trying to understand modern SEO tactics, this article will provide you with the factual information you need to make informed decisions.

Throughout this guide, we’ll maintain transparency about both the mechanics of these tools and the ethical considerations surrounding their use. Understanding how CTR manipulation works isn’t about encouraging its use—it’s about recognizing the landscape and making educated choices for sustainable online success.

Understanding CTR Bots and SearchSEO

Before diving into the complexities of automated clicking systems, we need to establish what we’re actually discussing. Click-through rate, commonly abbreviated as CTR, represents the percentage of people who click on a specific link after seeing it in search results. For instance, if your website appears 100 times in Google search results and receives 5 clicks, your CTR would be 5%.
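
The arithmetic above can be written as a small helper. This is a minimal sketch; the function name `click_through_rate` is chosen here for illustration and does not come from any particular analytics tool.

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """Return CTR as a percentage; 0.0 when there are no impressions."""
    if clicks < 0 or impressions < 0:
        raise ValueError("clicks and impressions must be non-negative")
    if impressions == 0:
        # No impressions means there was nothing to click on.
        return 0.0
    return 100.0 * clicks / impressions


# The example from the text: 5 clicks out of 100 impressions.
print(click_through_rate(5, 100))  # 5.0
```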

Many SEO practitioners have long treated this metric as a significant signal of content relevance, although search engines have never confirmed its exact weight. The logic seems straightforward: if users consistently click on a particular result, it likely provides value for that search query. This assumption has led some marketers down a path of artificial manipulation.

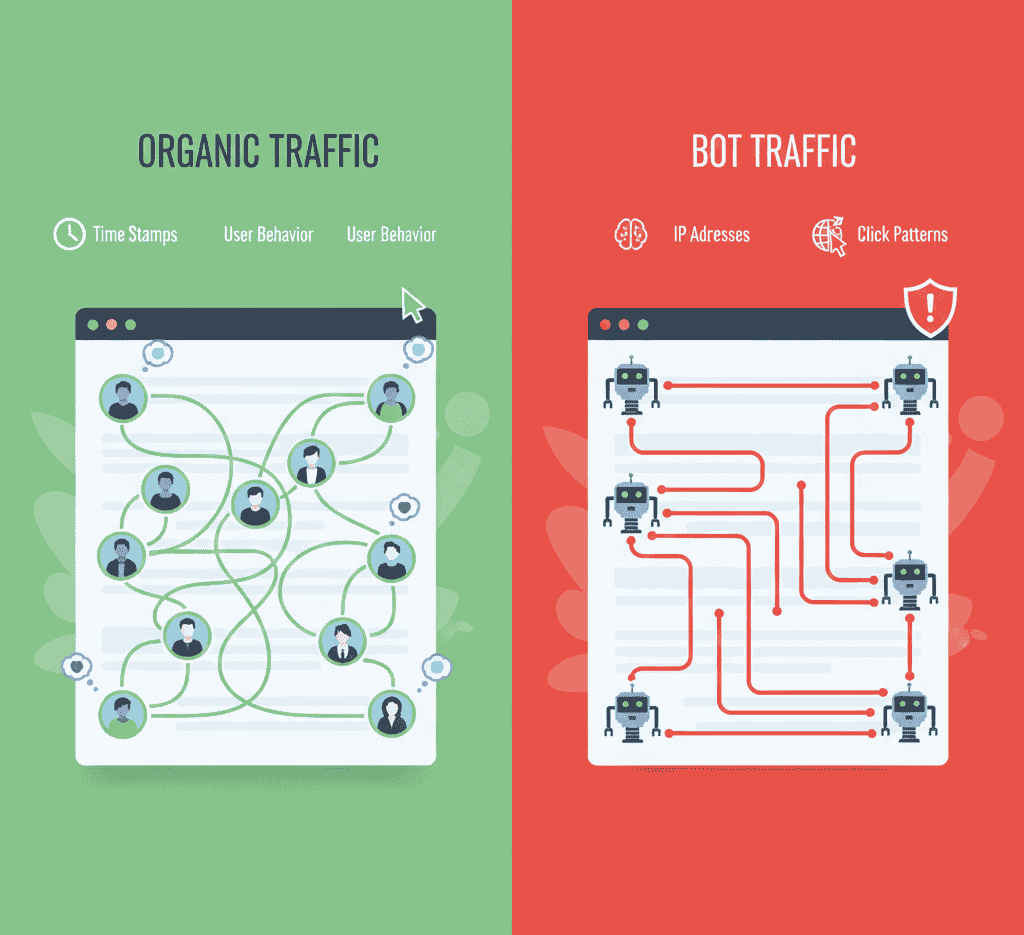

A CTR bot is essentially a software program designed to simulate human behavior in search engines. These bots perform automated searches for specific keywords, locate targeted websites in the results, and execute clicks to inflate engagement metrics artificially. The sophistication of these tools varies considerably—some operate with obvious robotic patterns, while others employ advanced techniques to mimic genuine user behavior.

The term “SearchSEO” in this context refers to the practice of using automated tools to manipulate search engine optimization metrics, particularly those related to user engagement. These systems don’t just click randomly; they’re programmed to follow specific patterns, spend time on pages, and sometimes even navigate through multiple pages of a website to create the illusion of authentic interest.

Modern click bots have evolved significantly from their primitive predecessors. Early versions were easily detectable, operating from single IP addresses and exhibiting repetitive, mechanical clicking patterns. Today’s versions are considerably more sophisticated, utilizing residential proxy networks, randomized timing intervals, and behavior patterns that closely mirror actual human browsing habits.

The technology typically involves multiple components working together: proxy servers to mask the bot’s origin, browser automation tools to simulate genuine interactions, and sophisticated algorithms that determine clicking patterns, dwell time, and navigation paths. Some advanced systems even incorporate machine learning to continuously adapt their behavior based on detection patterns.

How CTR Bot SearchSEO Works

Understanding the mechanics behind these systems reveals both their apparent sophistication and inherent limitations. The typical operation begins with keyword targeting—users specify which search terms they want to rank for and which URLs should receive clicks. The bot then initiates searches through various search engines, most commonly Google.

The automation process involves several critical steps. First, the bot must successfully access the search engine without triggering security measures. This requires rotating through different IP addresses, using residential proxies that appear to come from genuine internet service providers rather than data centers. Many systems maintain pools of thousands of IP addresses distributed across different geographic regions.

Once connected, the bot performs a search using the targeted keyword. Here’s where sophistication becomes crucial—a basic bot might immediately click the target result, but advanced systems introduce randomness. They might scroll through results, occasionally click on competing listings, or even refine their search query before eventually selecting the intended link.

After clicking through to the target website, the bot must demonstrate engagement. This means spending a realistic amount of time on the page, scrolling through content, and potentially clicking internal links. Some systems even simulate form fills or other interactions to strengthen the appearance of genuine interest. The goal is creating a behavioral fingerprint indistinguishable from an actual human visitor.

Browser fingerprinting presents another challenge these tools must overcome. Modern web analytics can detect numerous browser characteristics—screen resolution, installed plugins, operating system, language settings, and hundreds of other data points. Sophisticated bots must randomize these characteristics across sessions to avoid creating recognizable patterns.

The timing of these interactions matters enormously. Sending hundreds of clicks within minutes would immediately raise red flags. Instead, effective systems distribute their activity across hours or days, varying the time between searches and clicks to mirror natural traffic patterns. Some even account for typical human behavior, like reduced activity during nighttime hours in specific time zones.

Many CTR Bot SearchSEO systems also incorporate feedback loops. They monitor ranking changes and adjust their behavior accordingly, increasing or decreasing activity based on observed results. This adaptive approach attempts to find the “sweet spot” where manipulation remains effective without triggering detection algorithms.

Common Use Cases and Applications

Despite the risks involved, various parties have found reasons to experiment with automated clicking systems. Understanding these motivations provides insight into why this practice persists despite widespread condemnation from search engines and ethical SEO practitioners.

The most obvious application involves ranking manipulation. Website owners struggling to break into competitive search results sometimes turn to artificial CTR inflation, hoping to signal relevance to search algorithms. The reasoning follows that if their page suddenly shows strong engagement metrics, search engines might reward them with better positioning.

Competitor analysis represents another use case, though one that walks an ethical tightrope. Some businesses employ these tools to understand how click patterns affect rankings within their niche. They might test whether increased engagement on specific pages correlates with ranking improvements, using this data to inform their legitimate optimization strategies.

Local SEO presents unique applications for these systems. Businesses targeting specific geographic regions might use location-specific proxies to simulate local interest in their services. A restaurant in Chicago, for example, might generate clicks from Chicago-based IP addresses to strengthen their local search presence.

Interestingly, some companies have used similar technology for brand protection. When negative articles or reviews appear in search results, they might attempt to push these down by inflating engagement on more favorable content. While the intention might seem defensive rather than aggressive, this still constitutes manipulation.

Testing and experimentation form another category of use. SEO professionals curious about the role of CTR in ranking algorithms might conduct controlled experiments, though this ventures into ethically gray territory. Understanding how search engines respond to engagement signals can inform broader strategies, but using artificial means to gather this data remains problematic.

Some practitioners have advocated for using these tools on brand searches—instances where users specifically search for a company name. The argument suggests that since users already intend to find that business, artificially boosting those clicks merely accelerates an inevitable outcome. This rationalization, however, still involves deceiving search engines about genuine user behavior.

Risks and Consequences

The allure of quick ranking improvements fades when weighed against the substantial risks involved. Google and other major search engines have explicitly stated their opposition to artificial engagement manipulation, and they have developed increasingly effective methods for detecting and penalizing such behavior.

Search engine penalties represent the most immediate and obvious risk. When detected, websites employing click bots may experience sudden ranking drops, sometimes plummeting from first-page visibility to complete obscurity. These penalties can target specific pages or entire domains, depending on the severity and scope of manipulation detected.

Google’s algorithm updates have increasingly focused on identifying inauthentic signals. Penguin targeted manipulative link schemes and Panda targeted thin, low-quality content; together with later core and spam updates, they reflect a steady effort to separate genuine signals from manufactured ones. Sites caught manipulating metrics often face a difficult recovery process that can take months or years.

Beyond algorithmic penalties, manual actions represent an even more serious consequence. Google’s webspam team can manually review sites suspected of manipulation and impose penalties that are lifted only after the site owner fixes the problem and submits a reconsideration request. These manual penalties often prove more difficult to reverse than algorithmic ones.

The financial implications extend beyond lost rankings. Businesses dependent on organic search traffic may see dramatic revenue declines following penalties. E-commerce sites, service providers, and content publishers relying on search visibility can experience devastating impacts on their bottom line. The cost of recovery—including potential rebranding, content development, and legitimate SEO work—typically far exceeds any investment in click bot services.

Reputational damage poses another significant risk. If competitors or industry observers discover that a business has employed such tactics, the resulting publicity can undermine trust and credibility. In today’s transparent digital environment, where information spreads rapidly through social media and industry forums, maintaining ethical standards becomes crucial for long-term reputation management.

Legal considerations also come into play. While using automated clicking tools isn’t explicitly illegal in most jurisdictions, it may violate terms of service agreements with search engines and advertising platforms. Businesses could potentially face lawsuits for unfair competition or fraudulent activity, particularly if their manipulation demonstrably harms competitors.

Perhaps most importantly, relying on shortcuts prevents businesses from developing the genuine value and authority that drives sustainable success. Time and resources spent on manipulation could instead build quality content, improve user experience, and develop legitimate visibility—investments that compound over time rather than collapsing under scrutiny.

Search Engine Detection Methods

Search engines have invested enormous resources in developing sophisticated detection systems capable of identifying artificial engagement patterns. Understanding these mechanisms reveals why manipulation attempts so frequently fail and why the risk-reward calculation rarely favors using automated clicking tools.

Machine learning algorithms form the foundation of modern detection systems. These algorithms analyze billions of data points daily, learning to recognize patterns that distinguish genuine human behavior from bot activity. They examine click patterns, browsing behavior, engagement metrics, and countless other signals to build probabilistic models of authentic user interaction.

Behavioral analysis goes beyond simple click counting. Search engines track how users interact with results—do they immediately bounce back to search after clicking? How long do they spend on pages? Do they engage with content through scrolling, clicking internal links, or other interactions? Bots struggle to replicate the natural variability and purposefulness of human behavior.

Click pattern anomalies provide obvious red flags. When a website suddenly receives an unusual spike in clicks from specific keywords, particularly if those clicks exhibit suspicious characteristics like similar dwell times or geographic clustering, detection systems take notice. Natural traffic growth typically shows gradual, organic patterns rather than artificial spikes.
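
As a rough illustration of how a spike can stand out, the sketch below flags days whose click count sits far above a trailing average. It is not how any search engine actually works; the 7-day window and the threshold of 3 standard deviations are assumptions picked for the example.

```python
from statistics import mean, stdev


def flag_click_spikes(daily_clicks: list[int], window: int = 7,
                      threshold: float = 3.0) -> list[int]:
    """Return indices of days whose clicks exceed the trailing-window
    mean by more than `threshold` sample standard deviations."""
    flagged = []
    for i in range(window, len(daily_clicks)):
        history = daily_clicks[i - window:i]
        sigma = stdev(history)
        if sigma == 0:
            # A perfectly flat history gives no scale for a z-score; skip.
            continue
        z_score = (daily_clicks[i] - mean(history)) / sigma
        if z_score > threshold:
            flagged.append(i)
    return flagged


# Hypothetical daily click counts with one abrupt jump on day index 8.
clicks = [20, 22, 19, 21, 23, 20, 22, 21, 95, 24]
print(flag_click_spikes(clicks))  # [8]
```

The same check is useful on your own analytics data: a legitimate spike (a press mention, a seasonal event) usually has an explanation you can name.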

Browser fingerprinting technology allows search engines to identify individual browsing sessions with remarkable accuracy. By analyzing hundreds of browser characteristics—from screen resolution and installed fonts to WebGL rendering capabilities and audio context properties—they create unique identifiers for each visitor. When multiple “different” visitors share identical fingerprints, it suggests bot activity.
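
The idea of many "different" visitors sharing one fingerprint can be sketched as follows. The attribute names, the session layout, and the cutoff of three distinct IP addresses are all hypothetical; real fingerprinting uses far more signals than this.

```python
import hashlib
from collections import defaultdict


def fingerprint_hash(attributes: dict[str, str]) -> str:
    """Hash browser attributes in a key-sorted, order-independent form."""
    canonical = "|".join(f"{key}={attributes[key]}" for key in sorted(attributes))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def shared_fingerprints(sessions: list[dict],
                        min_ips: int = 3) -> dict[str, list[str]]:
    """Map each fingerprint seen from at least `min_ips` distinct IP
    addresses to the sorted list of those addresses."""
    ips_by_fingerprint = defaultdict(set)
    for session in sessions:
        ips_by_fingerprint[fingerprint_hash(session["attributes"])].add(session["ip"])
    return {
        fp: sorted(ips)
        for fp, ips in ips_by_fingerprint.items()
        if len(ips) >= min_ips
    }


# Hypothetical sessions: three share one fingerprint across different IPs.
desktop = {"screen": "1920x1080", "os": "Windows", "lang": "en-US"}
sessions = [
    {"ip": "198.51.100.1", "attributes": desktop},
    {"ip": "198.51.100.2", "attributes": desktop},
    {"ip": "198.51.100.3", "attributes": desktop},
    {"ip": "203.0.113.9",
     "attributes": {"screen": "390x844", "os": "iOS", "lang": "en-GB"}},
]
print(len(shared_fingerprints(sessions)))  # 1
```

With only a few coarse attributes, popular devices will collide legitimately, so a match like this is a reason to look closer, not proof of bot activity.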

Network analysis reveals connections between seemingly unrelated clicks. If multiple searches originate from IP addresses within the same subnet or data center range, or if they share other network characteristics, this suggests coordinated bot activity rather than independent user searches.
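
A simple version of the subnet check can be written with Python's standard `ipaddress` module. This sketch assumes IPv4 addresses and groups them by /24 network; the prefix length is an assumption for illustration, and the addresses come from documentation-reserved ranges.

```python
import ipaddress
from collections import Counter


def clicks_per_subnet(ips: list[str], prefix: int = 24) -> Counter:
    """Count clicks per network, grouping each address by its /prefix."""
    counts = Counter()
    for ip in ips:
        # strict=False lets a host address stand in for its network.
        network = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
        counts[str(network)] += 1
    return counts


# Hypothetical click sources: three fall inside the same /24.
ips = ["203.0.113.5", "203.0.113.77", "203.0.113.200", "198.51.100.14"]
print(clicks_per_subnet(ips).most_common(1))  # [('203.0.113.0/24', 3)]
```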

Velocity checking monitors how quickly actions occur. Human users need time to read search results, evaluate options, and decide what to click. Bots often exhibit superhuman speed, moving from search to click in a fraction of a second. Even sophisticated bots that introduce delays sometimes fail to match the natural rhythm and variability of human decision-making.
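
A velocity check can be as simple as measuring the gap between a search and the click that follows. The sketch below is illustrative only: the `SearchClick` record and the 0.5-second cutoff are assumptions, not documented values from any search engine.

```python
from dataclasses import dataclass


@dataclass
class SearchClick:
    search_ts: float  # seconds since epoch when results were shown
    click_ts: float   # seconds since epoch when a result was clicked


def fast_click_share(events: list[SearchClick], min_seconds: float = 0.5) -> float:
    """Fraction of clicks made sooner than `min_seconds` after the search."""
    if not events:
        return 0.0
    fast = sum(1 for e in events if (e.click_ts - e.search_ts) < min_seconds)
    return fast / len(events)


# Hypothetical log: two near-instant clicks, two with human-like delays.
events = [
    SearchClick(0.0, 0.1),
    SearchClick(10.0, 10.2),
    SearchClick(20.0, 24.5),
    SearchClick(30.0, 37.0),
]
print(fast_click_share(events))  # 0.5
```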

Engagement quality assessment examines what happens after clicks occur. Do visitors engage meaningfully with content, or do they exhibit shallow, formulaic interaction patterns? Search engines can detect when “users” consistently spend exactly 30 seconds on pages, scroll at mechanically consistent speeds, or follow identical navigation paths.
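
One way to quantify "formulaic" dwell times is the coefficient of variation (standard deviation divided by mean). A value near zero means visits are suspiciously uniform. This is a sketch of the idea only; where to draw the line is left open.

```python
from statistics import mean, pstdev


def dwell_time_cv(dwell_seconds: list[float]) -> float:
    """Coefficient of variation of dwell times; 0.0 means identical visits."""
    if not dwell_seconds:
        raise ValueError("need at least one dwell time")
    average = mean(dwell_seconds)
    if average == 0:
        return 0.0
    return pstdev(dwell_seconds) / average


# Hypothetical samples: scripted visits versus varied human visits.
scripted = [30, 30, 30, 30, 30]
varied = [5, 42, 180, 12, 75]
print(dwell_time_cv(scripted))       # 0.0
print(dwell_time_cv(varied) > 0.5)   # True
```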

Cross-platform correlation allows search engines to verify user authenticity across multiple touchpoints. When someone searches on mobile, clicks through, and later returns via desktop while logged into their Google account, this creates a verified user trail. Bots rarely replicate these complex, interconnected behavioral patterns.

Legitimate Alternatives to CTR Bots

Rather than risking penalties through manipulation, businesses should focus on proven strategies that genuinely improve click-through rates through quality and relevance. These white-hat approaches not only avoid detection risks but actually deliver sustainable, long-term value.

Title tag optimization represents one of the most impactful legitimate improvements. Your title appears as the clickable headline in search results, making it your primary opportunity to attract clicks. Effective titles clearly communicate content value, include relevant keywords naturally, and create curiosity or urgency without resorting to clickbait. Testing different title formulations and monitoring their performance allows continuous refinement.
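
When comparing two title formulations, a two-proportion z-test gives a rough sense of whether a CTR difference is more than noise. The sketch below uses only the standard library; the function name and the sample numbers are hypothetical. Search impressions are not a randomized experiment (position and query mix shift over time), so treat the result as a hint rather than proof.

```python
from math import erf, sqrt


def compare_title_ctr(clicks_a: int, impressions_a: int,
                      clicks_b: int, impressions_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for CTR of title B versus title A."""
    if impressions_a <= 0 or impressions_b <= 0:
        raise ValueError("impressions must be positive")
    p_a = clicks_a / impressions_a
    p_b = clicks_b / impressions_b
    pooled = (clicks_a + clicks_b) / (impressions_a + impressions_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / impressions_a + 1 / impressions_b))
    if std_err == 0:
        # Both variants have 0% or 100% CTR; no difference to test.
        return 0.0, 1.0
    z = (p_b - p_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = 1 - erf(abs(z) / sqrt(2))
    return z, p_value


# Hypothetical data: title A got 50/1000 clicks, title B got 80/1000.
z, p = compare_title_ctr(50, 1000, 80, 1000)
print(round(z, 2), p < 0.05)
```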

Meta descriptions, while not direct ranking factors, significantly influence click decisions. These brief summaries appear below titles in search results, providing context about page content. Compelling descriptions highlight unique value propositions, address user intent directly, and include clear calls to action. Though Google sometimes rewrites descriptions based on specific queries, optimizing these elements still matters.

Rich snippets and structured data markup enable your content to appear with enhanced visual elements in search results. Star ratings, pricing information, event details, recipe components, and other structured data can make your listings more prominent and informative. Implementing schema markup according to official guidelines helps search engines understand and display your content more effectively.
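
As an illustration, the following sketch builds a JSON-LD snippet using schema.org's `Product`, `AggregateRating`, and `Offer` types. The helper name and the product values are made up for the example; check the official guidelines for the properties your content type actually requires before publishing.

```python
import json


def product_jsonld(name: str, rating: float, review_count: int,
                   price: str, currency: str) -> str:
    """Return a <script> tag containing schema.org Product markup."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
        "offers": {
            "@type": "Offer",
            "price": price,
            "priceCurrency": currency,
        },
    }
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Hypothetical product used purely for demonstration.
snippet = product_jsonld("Example Widget", 4.6, 128, "19.99", "USD")
print(snippet)
```

The markup should only describe ratings and prices that are genuinely visible on the page.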

Content quality improvements naturally drive better engagement. When your content genuinely addresses user needs better than alternatives, people spend more time engaging, share it more frequently, and return more often. These authentic engagement signals carry far more weight than any manufactured metrics. Focus on depth, accuracy, originality, and practical value.

Page experience optimization ensures that users who do click through have positive experiences that encourage engagement. Fast loading times, mobile responsiveness, intuitive navigation, and accessible design all contribute to satisfaction that translates into positive behavioral signals search engines can measure.

Understanding search intent allows you to match content to user expectations more precisely. When someone searches for “how to fix a leaky faucet,” they want step-by-step instructions, not a sales page for plumbing services. Aligning content with intent ensures that visitors find what they expect, naturally improving engagement metrics.

Brand building through consistent quality establishes trust that improves CTR over time. Users who recognize your brand from previous positive experiences are more likely to click your results in future searches. This earned advantage comes from sustained value delivery rather than manipulation.

Internal linking and site structure optimization encourage visitors to explore multiple pages, naturally increasing engagement depth. When users find your content valuable and can easily discover related information, they spend more time on your site and view more pages—signals that genuinely indicate quality.

The Future of CTR and Search Engine Algorithms

The landscape of search engine optimization continues evolving rapidly, with implications for both legitimate practitioners and those tempted by shortcuts. Understanding these trends helps contextualize why manipulation becomes increasingly futile.

Artificial intelligence and machine learning capabilities continue to grow more sophisticated. Modern search algorithms evaluate quality, relevance, and value in ways that go well beyond simple keyword matching or engagement metrics. As these systems improve, they become progressively better at separating genuine value from artificial signals.

User experience signals extend beyond simple clicks. Search engines increasingly evaluate how satisfied users appear with results based on comprehensive behavioral analysis. Do they complete their apparent goals? Do they return to search for the same information, suggesting the first result was inadequate? These nuanced signals prove much harder to manipulate than basic CTR.

The rise of zero-click searches—where users find answers directly in search results without clicking through—fundamentally changes how we think about search success. Featured snippets, knowledge panels, and direct answers mean that ranking alone no longer guarantees traffic. This shift emphasizes providing genuine value that earns visibility rather than gaming specific metrics.

Voice search and conversational AI introduce new paradigms where traditional CTR becomes less relevant. When users ask voice assistants questions and receive spoken answers, there’s no click to manipulate. Success in this environment depends entirely on being recognized as the most authoritative source for specific information.

Personalization algorithms increasingly tailor results to individual users based on their history, preferences, and context. This makes ranking manipulation less meaningful—even if you successfully inflate engagement signals, search engines might still prioritize different results for different users based on what’s genuinely most relevant to them.

Real-time quality assessment allows search engines to quickly adjust rankings based on immediate user feedback. Rather than waiting for periodic algorithm updates, modern systems can respond dynamically to engagement patterns, making it harder for manipulation to gain sustained traction before detection occurs.

Frequently Asked Questions

Is using CTR Bot SearchSEO legal?

Using automated clicking systems exists in a legal gray area. While not explicitly illegal in most jurisdictions, it violates search engine terms of service and could potentially expose users to lawsuits for unfair competition or fraudulent activity. Beyond legal considerations, the practice clearly violates ethical standards within the digital marketing community.

Can search engines detect CTR bots?

Yes, in many cases. Modern search engines employ sophisticated detection methods designed to identify bot traffic, although they do not publish detection rates. Machine learning algorithms analyze behavioral patterns, browser fingerprints, network characteristics, and engagement quality to distinguish genuine users from automated systems. Detection capabilities continue improving as search engines invest heavily in combating manipulation.

What happens if you are caught using click bots?

Consequences range from algorithmic ranking penalties to manual actions that require direct appeals. Affected websites may experience dramatic traffic losses, revenue declines, and long-term reputational damage. Recovery typically requires demonstrating sustained ethical behavior and may take months or years.

Are there safe CTR improvement methods?

Absolutely. Optimizing title tags, meta descriptions, and structured data; improving content quality; enhancing page experience; and building brand recognition all naturally improve CTR without manipulation risks. These white-hat approaches deliver sustainable results that compound over time.

How much does CTR actually affect rankings?

While CTR likely serves as one among hundreds of ranking signals, its exact weight remains speculative. Search engines deliberately maintain ambiguity about specific ranking factors to prevent manipulation. Rather than fixating on individual metrics, focus on delivering genuine value that naturally generates positive signals across multiple dimensions.

What’s the difference between CTR bots and traffic bots?

CTR bots specifically target search engine results, simulating users who search for keywords and click specific listings. Traffic bots more broadly generate visits to websites through various methods—direct URL visits, referral links, or social media. Both involve artificial traffic generation but serve slightly different manipulation purposes.
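
In analytics terms, the distinction shows up in where a visit claims to come from. The sketch below sorts visits into channels by referrer; the host lists are small, hypothetical samples, and a referrer can be spoofed, so this only shows how the two kinds of traffic would be labeled.

```python
from urllib.parse import urlparse

# Illustrative, incomplete host lists (assumptions for this example).
SEARCH_HOSTS = {"www.google.com", "www.bing.com", "duckduckgo.com"}
SOCIAL_HOSTS = {"www.facebook.com", "t.co", "www.linkedin.com"}


def classify_visit(referrer: str) -> str:
    """Label a visit as 'direct', 'search', 'social', or 'referral'."""
    if not referrer:
        return "direct"
    host = urlparse(referrer).hostname or ""
    if host in SEARCH_HOSTS:
        return "search"
    if host in SOCIAL_HOSTS:
        return "social"
    return "referral"


print(classify_visit("https://www.google.com/"))  # search
print(classify_visit(""))                         # direct
```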

Conclusion

The world of search engine click bots represents a cautionary tale about shortcuts in digital marketing. While the temptation to artificially boost engagement metrics might seem appealing, particularly when facing competitive pressure or slow organic growth, the risks far outweigh any potential benefits.

Search engines have demonstrated remarkable sophistication in detecting manipulation attempts, and their capabilities continue advancing. The temporary ranking gains some might achieve through artificial CTR inflation pale in comparison to the consequences of detection: lost rankings, damaged reputation, and wasted resources that could have built sustainable success.

More fundamentally, relying on manipulation distracts from the core principle that should guide all digital marketing efforts: creating genuine value for users. When you focus on understanding audience needs, delivering exceptional content and experiences, and building authentic authority in your field, you develop visibility that withstands algorithm changes and competitive pressure.

The future of search optimization clearly trends toward rewarding authenticity and penalizing manipulation. As artificial intelligence grows more capable of evaluating quality and relevance, the window for gaming systems continues closing. Businesses that invest in legitimate strategies position themselves for long-term success, while those chasing shortcuts face increasingly certain detection and penalties.

For anyone considering automated clicking tools, the message should be clear: don’t. Channel that energy and investment into building something genuinely valuable instead. Your future self will thank you when you’ve developed sustainable organic visibility rather than facing the consequences of a shortcut that never really worked.